ZFS Data Recovery: PowerVault MD1000 Failure

What Is ZFS?

ZFS is an open-source file system designed by Sun Microsystems. ZFS takes on far more responsibility than most file systems. Its bag of tricks includes copy-on-write transactions and an intent log that works much like a journal, self-healing checksums designed to help prevent silent data corruption, snapshots, and built-in compression and deduplication.

None of these features is unique to ZFS. What makes this file system special is that all of them are handled natively by the file system itself instead of coming from third-party software. These features make it harder to lose data, which is a relief for ZFS users. However, when data loss does happen, the same features add extra hurdles for our ZFS data recovery experts to jump over.

What Is RAID-Z?

One feature in particular makes ZFS an incredibly useful file system for RAID servers. RAID-Z lets ZFS behave like its own RAID controller, combining separate storage devices into efficient and redundant RAID arrays.

Like ZFS’s other advanced features, RAID-Z is baked into the file system. In many ways, RAID-Z is a bit like Windows Storage Spaces, which uses software rather than a physical RAID controller to link multiple drives into a RAID array. ZFS can set up multi-drive logical volumes that behave like standard or nested RAID levels: mirrors work like RAID-1, RAID-Z1 and RAID-Z2 provide RAID-5-like single parity and RAID-6-like double parity, and striping across several such groups produces nested layouts similar to RAID-10 or RAID-60. You can also set up more esoteric volumes, like RAID-Z3 arrays with triple parity, or volumes in which part of the data is mirrored like RAID-1 and part has RAID-5- or RAID-6-like parity.
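Single parity of the RAID-5 or RAID-Z1 kind rests on XOR: the parity block is the XOR of the data blocks in a stripe, so any one missing block can be rebuilt by XORing everything that survives. The minimal sketch below shows only that single-parity idea with hypothetical data; RAID-Z2 and RAID-Z3 add second and third parity blocks computed with Galois-field arithmetic, which is not shown here.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length byte blocks together, byte position by byte position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def reconstruct_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Rebuild one lost data block from the surviving blocks plus the parity block."""
    return xor_blocks(surviving + [parity])


# Hypothetical stripe: three data blocks on three disks, parity on a fourth.
data = [b"VMDK", b"ZFS!", b"8TB?"]
parity = xor_blocks(data)

# Lose the disk holding the middle block, then rebuild it from the rest.
rebuilt = reconstruct_missing([data[0], data[2]], parity)
print(rebuilt)  # b'ZFS!'
```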

And, also like Storage Spaces, you can make a RAID-Z array using things other than hard drives or SSDs… like USB flash drives. (Of course, there’s very little reason you’d want to do this, other than the novelty…)

Because ZFS itself handled all the dirty work of managing the RAID arrays, the hardware RAID controller attached to the Dell PowerVault enclosure didn’t have to do anything special with the drives in each server, which is why the controller was set to JBOD mode.

ZFS Data Recovery—The State of the Array

Our ZFS data recovery engineers found that two hard drives in one of the two servers were dead on arrival. Since the RAID-Z setup was configured to act like RAID-6, providing dual parity, two failed drives was the maximum the array could sustain and still function. What likely happened was that, before the server was dismantled, one otherwise healthy drive hiccuped, causing the (physical) RAID controller to pull it offline. Some RAID controllers are very sensitive to what they perceive as imminent drive failure and will occasionally drop a fairly healthy drive at the slightest hint of trouble.
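The fault-tolerance rule above is easy to express in code. This sketch assumes each array’s twelve hard drives (fourteen, minus the hot spare and the SSD) formed a single RAID-Z2 vdev; the case notes don’t spell out the exact vdev layout, so that figure is an assumption, and the helper `raidz_summary` is hypothetical.

```python
def raidz_summary(disks: int, parity: int, failed: int) -> dict:
    """Rough health and capacity summary for a single RAID-Z vdev."""
    if parity not in (1, 2, 3):
        raise ValueError("RAID-Z offers single, double, or triple parity only")
    if disks <= parity:
        raise ValueError("a RAID-Z vdev needs more disks than parity devices")
    return {
        # Approximate usable share; real RAID-Z also loses space to padding and metadata.
        "data_disks": disks - parity,
        # Each parity level lets the vdev survive one more failed disk.
        "tolerates": parity,
        "still_online": failed <= parity,
        # How many further failures the vdev could absorb right now.
        "failures_left": max(parity - failed, 0),
    }


# Assumed layout: 12 hard drives in one RAID-Z2 vdev, two of them dead.
print(raidz_summary(disks=12, parity=2, failed=2))
# {'data_disks': 10, 'tolerates': 2, 'still_online': True, 'failures_left': 0}
```

With two drives already gone, the array is still readable but has no redundancy left, which is why one more failure would have been catastrophic.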

The hard drives in the second array were all healthy. However, its SSD cache had died. Its twin in the other array still worked, but just barely. Among other things, these SSDs had acted as separate log devices holding the ZFS Intent Log (ZIL). Much like the journals in file systems such as HFS+ and ext4, the ZIL records synchronous changes, such as altering or deleting files, before they are committed to the main pool. If the system crashes or suddenly loses power, ZFS can replay those logged changes so they aren’t lost.

Logs eventually fill up the space allotted to them. When that happens, the logging system usually wraps around and starts overwriting itself, beginning with the oldest entries. Combine this constant rewriting with the limited write endurance of the flash memory inside SSDs and you have a recipe for a drive that will soon start crying out in pain.
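The wrap-around behavior described above can be sketched as a generic fixed-size circular log. This is an illustration under simplifying assumptions, not ZFS’s actual ZIL on-disk format (which chains log blocks together). The hypothetical `writes_per_slot` counter shows how a log that never stops writing keeps hammering the same storage; a real SSD controller spreads those writes across its NAND with wear leveling, but the total wear still adds up.

```python
class CircularLog:
    """A fixed-size log that overwrites its oldest entry once it is full."""

    def __init__(self, slots: int) -> None:
        self.entries: list = [None] * slots
        self.writes_per_slot = [0] * slots  # crude stand-in for flash wear
        self.head = 0                       # next slot to write

    def append(self, record: str) -> None:
        self.entries[self.head] = record
        self.writes_per_slot[self.head] += 1
        # Wrap back to the start once the end of the log is reached.
        self.head = (self.head + 1) % len(self.entries)

    def oldest_first(self) -> list:
        # The slot at `head` holds the oldest surviving entry.
        ordered = self.entries[self.head:] + self.entries[:self.head]
        return [e for e in ordered if e is not None]


log = CircularLog(slots=4)
for i in range(10):
    log.append(f"txn-{i}")

print(log.oldest_first())    # ['txn-6', 'txn-7', 'txn-8', 'txn-9']
print(log.writes_per_slot)   # [3, 3, 2, 2]
```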

The Dangers of Consumer-Grade Equipment in Enterprise Environments

The cells inside an SSD’s NAND flash chips can only be programmed and erased a limited number of times. Consumer-grade SSDs (like Kingston SSDNow drives) are typically rated for only a few thousand program-erase cycles per block before the flash wears out. Once the drive runs out of usable blocks, no more data can be written to it. This doesn’t necessarily kill the SSD outright; many drives respond by switching into a permanent read-only mode.

The sorry state of this Kingston SSDNow solid state drive illustrates the major pitfall of using consumer-grade equipment in enterprise environments. Inside a server, components are subjected to far more intensive labor than they face in a home or personal computer.

The hard drives in your business’s server work hard, and around the clock to boot. By comparison, the drive in your home or office PC only puts in work when you’re using the computer. Enterprise-grade hard drives and SSDs are specially designed to tolerate the punishing workload of a server. They run specialized firmware, and enterprise SSDs are rated for far more program-erase cycles, so they can handle the kind of work our client needed from them.
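The gap in program-erase ratings translates directly into lifespan. A common back-of-envelope estimate takes the drive’s raw NAND endurance (capacity × rated P/E cycles), divides it by the write amplification factor to get how much the host can actually write, then divides by the daily write load. Every number below is an illustrative assumption, not a specification for the Kingston drives in this case or any other model, and `estimated_lifetime_days` is a hypothetical helper.

```python
def estimated_lifetime_days(capacity_gb: float, pe_cycles: int,
                            write_amplification: float,
                            gb_written_per_day: float) -> float:
    """Rough SSD lifetime estimate from its endurance rating and workload."""
    nand_endurance_gb = capacity_gb * pe_cycles                  # total raw NAND writes
    host_endurance_gb = nand_endurance_gb / write_amplification  # writes the host can issue
    return host_endurance_gb / gb_written_per_day


# Assumed workload: a 120 GB log device absorbing 500 GB of writes per day,
# with a write amplification factor of 3. Only the P/E rating differs.
consumer = estimated_lifetime_days(120, 3_000, 3.0, 500)
enterprise = estimated_lifetime_days(120, 30_000, 3.0, 500)
print(f"consumer: {consumer:.0f} days, enterprise: {enterprise:.0f} days")
# consumer: 240 days, enterprise: 2400 days
```

Under these assumptions, a tenfold higher P/E rating buys a tenfold longer service life for the same workload.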

Enterprise-grade hard drives and SSDs are more expensive than consumer-grade storage devices. However, the benefits make the extra expense worth it. Enterprise storage devices are far from immune to failure, but they are designed specifically to last longer and deliver better performance.

PowerVault MD1000 Data Recovery

Recovering the data from this Dell MD1000 server would take more than fitting the server’s drives back together. Our server and ZFS data recovery experts would have to deal with a complicated ZFS file system and software RAID, as well as a pair of somewhat unexpected SSDs.

The client in this ZFS data recovery case had split their twenty-eight drives into two fourteen-drive servers, contained in the same Dell PowerVault enclosure. Of the fourteen drives making up each array, one was a hot spare, meant to “kick in” and start pulling its weight if another drive in the array failed. Another was a Kingston SSDNow solid state drive, which, according to the client, functioned as a high-speed cache for the array.

When we examined the servers, we found that, according to the RAID controller, both were set up in a JBOD configuration. “JBOD” stands for “just a bunch of disks”. When you link drives in a JBOD, you don’t apply any striping, mirroring, or parity. Instead, the drives just… sit there. Sometimes the RAID controller “spans” the drives in a JBOD, concatenating them end to end to form a single logical volume.
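To make “spanning” concrete, here is a minimal sketch of how a spanned volume might map a logical block address onto a member disk: the disks are simply laid end to end, with no striping or parity. The function `span_lookup` and the disk sizes are hypothetical, and a real controller adds its own metadata and alignment on top of this plain concatenation.

```python
def span_lookup(disk_sizes: list[int], lba: int) -> tuple[int, int]:
    """Map a logical block address on a spanned volume to (disk index, block on that disk)."""
    offset = lba
    for disk, size in enumerate(disk_sizes):
        if offset < size:
            return disk, offset
        # Skip past this disk and keep walking along the concatenation.
        offset -= size
    raise ValueError("LBA lies past the end of the spanned volume")


# Hypothetical span of three disks holding 100, 200, and 50 blocks.
print(span_lookup([100, 200, 50], 250))  # (1, 150)
print(span_lookup([100, 200, 50], 300))  # (2, 0)
```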

These drives weren’t even spanned; each one stood on its own. (Funnily enough, this meant that the order of the drives wouldn’t have mattered at all, because ZFS identifies each member disk by the labels it writes onto that disk rather than by the disk’s slot in the enclosure.) In fact, the hardware RAID controller wasn’t responsible for turning these drives into a functional RAID array. Instead, all that work was being done by the ZFS file system, using its software RAID capabilities.
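Why drive order stops mattering can be sketched in a few lines. Each disk in a ZFS pool carries labels recording, among other things, the pool’s GUID and the disk’s place in the vdev tree, and `zpool import` uses those labels to find and assemble pool members wherever they happen to be connected. The `DiskLabel` class and `reassemble` function below are hypothetical, heavily simplified stand-ins (real labels hold far more detail), so treat this as an illustration of the idea rather than ZFS’s actual import logic.

```python
import random
from dataclasses import dataclass


@dataclass
class DiskLabel:
    """Simplified stand-in for the metadata ZFS writes onto each member disk."""
    pool_guid: int
    child_id: int  # this disk's position within its RAID-Z vdev
    serial: str


def reassemble(disks: list[DiskLabel], pool_guid: int) -> list[str]:
    """Pick out one pool's disks and put them in vdev order, ignoring physical slots."""
    members = [d for d in disks if d.pool_guid == pool_guid]
    members.sort(key=lambda d: d.child_id)
    return [d.serial for d in members]


# Hypothetical: two pools' unlabeled drives mixed together in a box after the move.
pool_a = [DiskLabel(0xA, i, f"A{i:02d}") for i in range(12)]
pool_b = [DiskLabel(0xB, i, f"B{i:02d}") for i in range(12)]
pile = pool_a + pool_b
random.shuffle(pile)

print(reassemble(pile, 0xA))  # ['A00', 'A01', ..., 'A11'] regardless of the shuffle
```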

The client in this ZFS data recovery case had a Dell PowerVault MD1000 holding two fourteen-drive RAID arrays. When their business relocated, they needed the servers packed up and moved as well. To their chagrin, the moving company removed all twenty-eight drives from the PowerVault. Our client received the server in two pieces: the chassis itself, and twenty-eight loose drives. To make matters worse, none of the drives were labeled, so the client had no idea what order the drives went in!

The client approached us to put the drives back together. In many situations, the order of the drives matters quite a bit. Inserting a RAID server’s drives out of order can confuse the RAID controller and may even lead to corruption and data loss. Our RAID data recovery specialists, though, had the necessary skills and analytical tools to work out the array’s drive order.

When we took a look at the server, though, we found that we’d have to do more than just put the drives back in the right order. Both fourteen-drive RAID arrays were inaccessible by normal means. We would need to do some data recovery work, including working through their ZFS file system, to return the client’s data to them.

ZFS Data Recovery Case Study: PowerVault MD1000 Failure

Server Model: Dell PowerVault MD1000

RAID Level: RAID-Z

Total Capacity: 24 TB

Operating/File System: ZFS

Data Loss Situation: ZFS server unresponsive after relocating

Type of Data Recovered: Virtual machines

Binary Read: 100%

Gillware Data Recovery Case Rating: 9

ZFS Data Recovery Results

Our ZFS data recovery engineers rebuilt the RAID-Z arrays for the two 14-drive servers and found something very strange. We had all the client’s data. In fact, we had way more than we should have had! The two servers together had about 24 terabytes of usable space. Yet when we went to extract the data, we found ourselves looking at 56 terabytes of data!

How did ZFS cram more than double the total capacity of the array into this PowerVault?

The answer lies in one, or rather two, of ZFS’s advanced features. ZFS can take snapshots of the datasets and volumes that hold virtual machines. These snapshots act as incremental copies of the virtual machines, which the user can “roll back” to in case something goes wrong. Because ZFS is copy-on-write, a snapshot shares every unchanged block with the live data instead of storing its own copy. Copied out as full, separate files, though, these snapshots would take up a lot of space, because they contain a lot of duplicate data. This is where ZFS’s deduplication features come in.
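A toy model helps show why snapshots are cheap inside ZFS but expensive once exported. In this minimal sketch, a virtual disk is a list of pointers into a block store; writes always go to fresh blocks (copy-on-write), and a snapshot just freezes a copy of the pointer list. The `CowVolume` class is hypothetical and far simpler than ZFS; for one thing, it never frees blocks that no snapshot references anymore.

```python
class CowVolume:
    """Toy copy-on-write volume: a disk image is a list of pointers into a block store."""

    def __init__(self) -> None:
        self.blocks: dict[int, bytes] = {}         # block id -> contents
        self.next_id = 0
        self.live: list[int] = []                  # pointers for the live VM disk
        self.snapshots: dict[str, list[int]] = {}  # snapshot name -> frozen pointers

    def write(self, index: int, data: bytes) -> None:
        if index > len(self.live):
            raise IndexError("can only overwrite a block or append one at the end")
        # New data always lands in a fresh block; old blocks stay put,
        # so any snapshot still pointing at them keeps its original contents.
        block_id = self.next_id
        self.blocks[block_id] = data
        self.next_id += 1
        if index == len(self.live):
            self.live.append(block_id)
        else:
            self.live[index] = block_id

    def snapshot(self, name: str) -> None:
        # A snapshot copies the pointer list, not the data it points to.
        self.snapshots[name] = list(self.live)

    def physical_bytes(self) -> int:
        return sum(len(b) for b in self.blocks.values())

    def materialized_bytes(self) -> int:
        # What exporting the live disk and every snapshot as full files would add up to.
        images = [self.live, *self.snapshots.values()]
        return sum(len(self.blocks[p]) for image in images for p in image)


vol = CowVolume()
for i, chunk in enumerate([b"boot", b"data", b"logs"]):
    vol.write(i, chunk)
vol.snapshot("monday")
vol.write(2, b"LOGS")
vol.snapshot("tuesday")
vol.write(1, b"DATA")
print(vol.physical_bytes(), vol.materialized_bytes())  # 20 36
```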

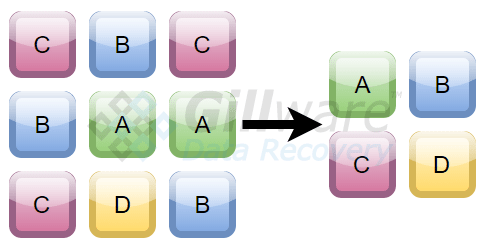

Put simply, instead of writing the same data to another part of the disk, ZFS just says, “Okay, this part of a file is identical to that part of another file. So I’ll just point to that part instead of writing the same data to a different part of the disk.”
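That “just point to it” logic can be sketched as a content-addressed block store. ZFS keys its dedup table on each block’s checksum (SHA-256 by default when deduplication is enabled) and keeps a reference count per entry; the `DedupStore` class below is a hypothetical toy loosely modeled on that idea, with a tiny block size so the example stays readable.

```python
import hashlib


class DedupStore:
    """Toy content-addressed block store with reference counts."""

    def __init__(self, block_size: int = 4) -> None:
        self.block_size = block_size
        self.table: dict[str, list] = {}  # checksum -> [block contents, refcount]

    def write_file(self, data: bytes) -> list[str]:
        pointers = []
        for start in range(0, len(data), self.block_size):
            block = data[start:start + self.block_size]
            key = hashlib.sha256(block).hexdigest()
            if key in self.table:
                self.table[key][1] += 1       # identical block: just add a reference
            else:
                self.table[key] = [block, 1]  # new block: store it once
            pointers.append(key)
        return pointers

    def read_file(self, pointers: list[str]) -> bytes:
        return b"".join(self.table[k][0] for k in pointers)

    def physical_bytes(self) -> int:
        return sum(len(block) for block, _ in self.table.values())


store = DedupStore()
vm1 = store.write_file(b"BOOTBOOTDATA")
vm2 = store.write_file(b"BOOTDATADATA")
# 24 bytes of file data, but only two unique 4-byte blocks are actually stored.
print(store.physical_bytes())  # 8
```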

Essentially, some blocks in the logical volume get used twice (or more). However, only ZFS knows that these blocks stand in for parts of multiple files. Here, for example, the servers only had about 12 terabytes of actual user data according to ZFS. But any tool that doesn’t understand ZFS’s block sharing, including other file systems, counts these shared blocks once for every file that uses them, leading to wildly inflated data sizes.
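Using the two figures from this case, a back-of-envelope calculation shows how heavily the shared blocks were reused. This is a simple ratio of apparent to actual data, not a figure reported by ZFS itself.

```python
apparent_tb = 56    # total size of the extracted data
referenced_tb = 12  # actual user data according to ZFS
ratio = apparent_tb / referenced_tb
print(f"{ratio:.2f}x")  # 4.67x
```

In other words, on average each stored block was being counted roughly four to five times during extraction.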

In the end, our ZFS data recovery experts recovered the vast majority of our client’s data. Some of the virtual machines had suffered very minor corruption, but the rest of the client’s data was intact. We rated this ZFS data recovery case a 9 on our ten-point scale.